Surgery, Gastroenterology and Oncology


Artificial intelligence (AI) can be used to improve surgical services at all stages: preoperative, intraoperative, and postoperative. In the preoperative stage, AI can be used to detect and classify diseases, segment the results of radiological examinations, facilitate the process of registering patient data, provide advice in decision-making, and provide prognostic predictions for the results of the surgical procedures to be performed. At the intraoperative stage, AI can be used to generate 3D reconstructions during the surgical process, improve navigation capabilities during endoscopic procedures, provide tissue tracking features, enable the use of augmented reality during surgery, and improve the efficiency of surgical robots in minimally invasive surgery. In the postoperative stage, AI can mainly be used for the automated processing of electronic health records data.

Introduction

The concept of Artificial Intelligence (AI) originated from the research conducted by Alan Turing, although John McCarthy was the first to coin the term during the Dartmouth Summer Research Project in 1956 (1). Artificial intelligence is the outcome of merging numerical computations with computer assistance to generate intelligence. Authors often argue that AI produces computer simulations with three main objectives: analysis, comprehension, and prediction (2). Another definition characterises AI as machines that operate in a proactive and suitable manner (3). Taking these definitions into account, it is fair to say that AI refers to the utilisation of a computer to analyse data, make decisions, or aid in completing tasks.

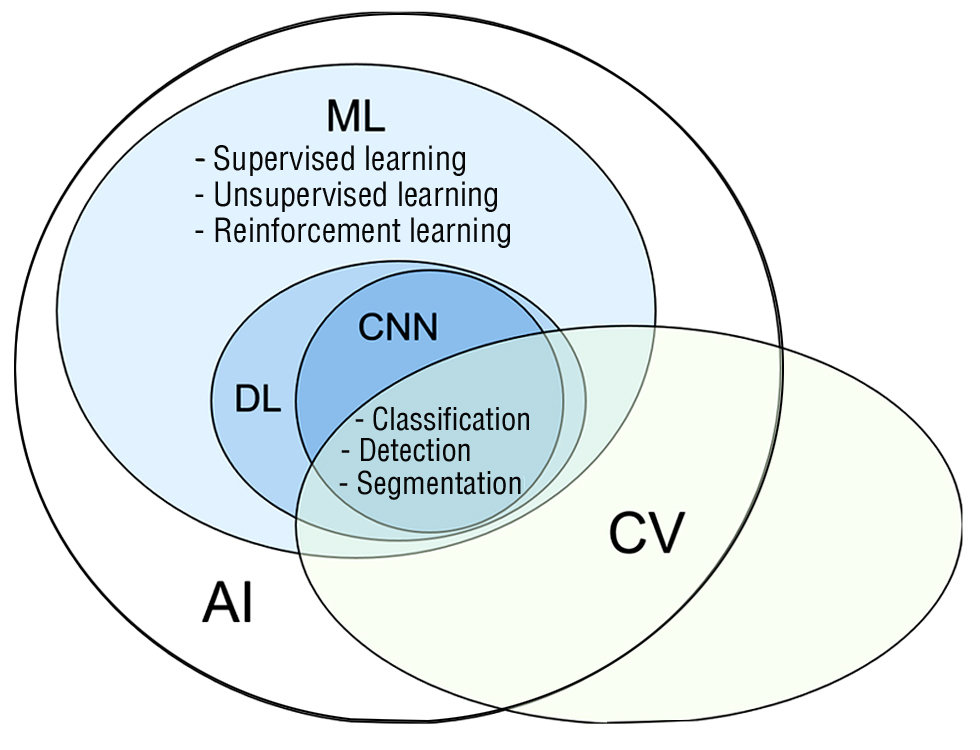

The term AI has become difficult to pin down, as it is often used in place of more technical terms such as machine learning. AI is a broad category that encompasses subfields such as machine learning, which includes methods such as neural networks and deep learning. Fig. 1 depicts an AI taxonomy that clarifies the connections between these subjects. The subfields are interconnected and, depending on the specific use case, techniques from one subfield can be merged with or incorporated into another (4).

Figure 1 - Basic terminology in AI. AI - artificial intelligence; ML - machine learning; DL - deep learning; CN - convolutional neural network; CV - computer vision

Classification of AI

Machine learning

Machine learning (ML) is the field of research that focuses on developing and utilising statistical models and techniques to enable machines to acquire knowledge and perform tasks. Machine learning methods utilise inherent qualities or attributes within the data to perform tasks, without the need for explicit programming. Typically, these tasks are divided into two distinct categories: regression (i.e., creating a model to understand the relationship between continuous variables) and classification (i.e., dividing data into different groups). In conventional machine learning, features are manually selected or engineered by humans to guide the algorithms in evaluating specific aspects of the data during analysis. Neural networks, on the other hand, extract features automatically, as explored later (4).

Supervised and unsupervised learning are the primary modalities of machine learning. Supervised learning involves training an algorithm to make predictions based on a specified output. This process requires labelled data sets that are divided into training and test sets for evaluation (5). Unsupervised learning, on the other hand, involves identifying patterns in data that do not have any predetermined annotations. It can discern connections between groups, for example through clustering, and produce hypotheses for subsequent research. This can be applied to more specific data sets, such as surgical motion and activity, as well as to more general surgical data, such as patient outcomes databases. Unsupervised learning has been utilised, for example, to automatically detect suturing movements in surgical recordings and to identify patients undergoing heart surgery with a high risk of complications (6,7).
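The supervised workflow described above (labelled data, a training set, and a held-out test set) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the data are synthetic, the labels `low_risk` and `high_risk` are hypothetical, and the nearest-centroid classifier is chosen for brevity rather than taken from the cited works.

```python
import random

def nearest_centroid_fit(samples, labels):
    """Compute one mean feature vector (centroid) per class label."""
    sums, counts = {}, {}
    for x, y in zip(samples, labels):
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: [s / counts[y] for s in acc] for y, acc in sums.items()}

def nearest_centroid_predict(centroids, x):
    """Assign the label whose centroid is closest in squared Euclidean distance."""
    def dist(c):
        return sum((a - b) ** 2 for a, b in zip(x, c))
    return min(centroids, key=lambda y: dist(centroids[y]))

# Synthetic, hypothetical data: two features per case, two well-separated classes.
rng = random.Random(0)
data = [([rng.gauss(0, 1), rng.gauss(0, 1)], "low_risk") for _ in range(100)]
data += [([rng.gauss(5, 1), rng.gauss(5, 1)], "high_risk") for _ in range(100)]
rng.shuffle(data)

# Hold out 20% of the labelled cases as a test set.
split = int(0.8 * len(data))
train, test = data[:split], data[split:]

# Fit on the training set only, then evaluate on cases the model has not seen.
centroids = nearest_centroid_fit([x for x, _ in train], [y for _, y in train])
correct = sum(nearest_centroid_predict(centroids, x) == y for x, y in test)
accuracy = correct / len(test)
print(f"test accuracy: {accuracy:.2f}")
```

Evaluating on the held-out cases, rather than on the training cases, is what gives an estimate of how the model would behave on new patients.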

Reinforcement learning (RL) is a third category of learning, distinct from supervised and unsupervised learning. It can be likened to operant conditioning: the model learns by repeatedly attempting different actions, with rewards and punishments shaping its behaviour so as to maximise reward (5,8).
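The trial-and-error loop can be illustrated with a minimal sketch, assuming the simplest possible setting: a two-action problem in which each action pays a reward of 1 with a fixed, hypothetical probability and 0 otherwise. The epsilon-greedy rule used here is one common strategy and is an assumption of this example, not a method described in the cited sources.

```python
import random

def run_bandit(reward_probs, steps=5000, epsilon=0.1, seed=0):
    """Learn action values by trial and error with an epsilon-greedy policy."""
    rng = random.Random(seed)
    values = [0.0] * len(reward_probs)  # running estimate of each action's reward
    counts = [0] * len(reward_probs)    # how often each action was tried
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(len(reward_probs))  # explore a random action
        else:
            action = max(range(len(values)), key=values.__getitem__)  # exploit
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        counts[action] += 1
        # Incremental mean: move the estimate towards the observed reward.
        values[action] += (reward - values[action]) / counts[action]
    return values, counts

# Hypothetical environment: action 1 is rewarded far more often than action 0.
values, counts = run_bandit([0.2, 0.8])
best_action = max(range(len(values)), key=values.__getitem__)
print(best_action, counts)
```

Over many repetitions the agent comes to prefer the action that is rewarded more often, which is the behaviour-shaping effect the operant conditioning analogy refers to.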

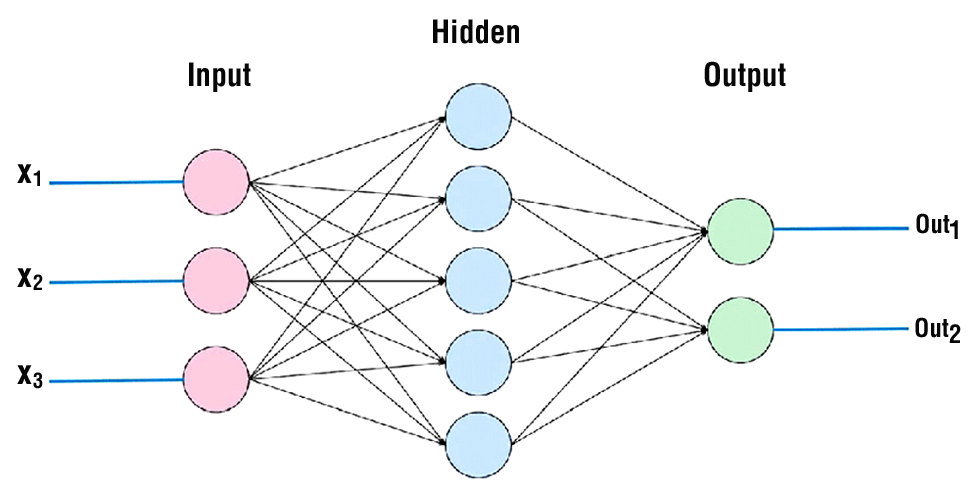

Artificial neural networks

In traditional machine learning, features, also known as variables, are manually chosen by an individual to optimise performance for a certain task. Whiskers and pointy ears, for example, might be hand-crafted features in the task of recognising a cat. Neural networks, drawing inspiration from biological nervous systems, employ layers of simple computational units designed to mimic neurones for the purpose of analysing input (fig. 2). Unlike standard machine learning, neural networks can extract features from data and utilise them as inputs. The system then adjusts the weights of the features used within an activation function, thereby generating an output (9). In essence, the system autonomously alters the weights to strengthen or weaken connections within the network, aiming to achieve optimal results through predetermined mathematical algorithms.
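A minimal sketch of these mechanics follows, assuming a tiny network with two inputs, two hidden units, and one output, a sigmoid activation, and hand-picked hypothetical weights. It runs one forward pass and then one gradient-descent update of the output layer, to show how changing the weights moves the output towards a target.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def layer_forward(inputs, weights, biases):
    """One fully connected layer: weighted sum per unit, then sigmoid activation."""
    return [
        sigmoid(sum(w * x for w, x in zip(unit_weights, inputs)) + b)
        for unit_weights, b in zip(weights, biases)
    ]

# Hypothetical weights for a 2-input, 2-hidden-unit, 1-output network.
hidden_w = [[0.5, -0.4], [0.3, 0.8]]
hidden_b = [0.0, -0.1]
output_w = [[1.2, -0.7]]
output_b = [0.05]

x = [1.0, 2.0]
hidden = layer_forward(x, hidden_w, hidden_b)
output = layer_forward(hidden, output_w, output_b)[0]

# One gradient-descent step on the output layer for squared error (output - target)^2.
target, learning_rate = 1.0, 0.5
grad_scale = 2 * (output - target) * output * (1 - output)  # dLoss/d(pre-activation)
new_output_w = [[w - learning_rate * grad_scale * h for w, h in zip(output_w[0], hidden)]]
new_output_b = [output_b[0] - learning_rate * grad_scale]
new_output = layer_forward(hidden, new_output_w, new_output_b)[0]
print(output, new_output)
```

After the update the output lies closer to the target than before. Repeating such updates across all layers and many examples is, in outline, how a network strengthens or weakens its connections.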

Figure 2 - Three-layer neural network

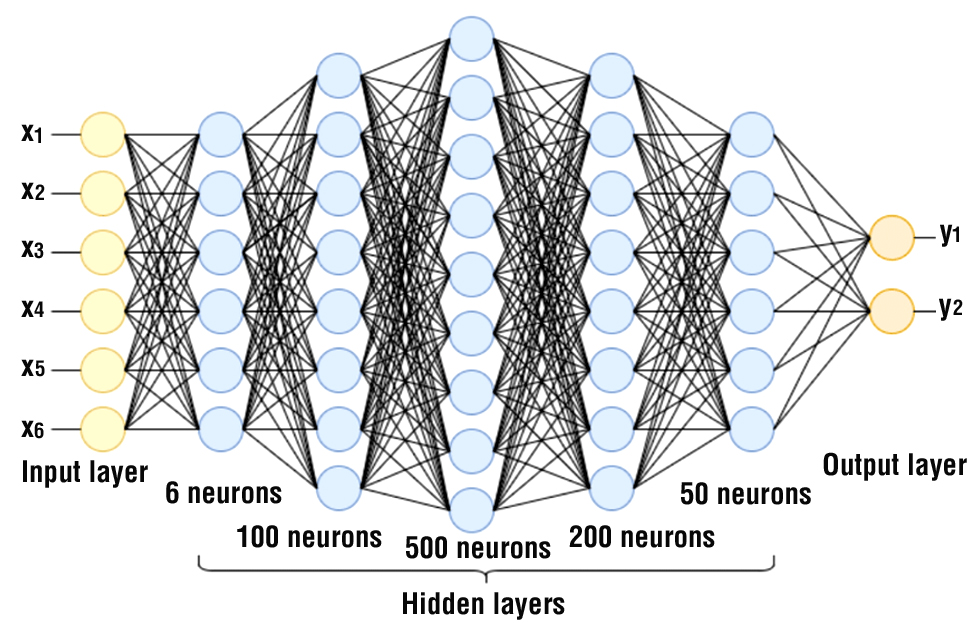

Deep learning

Deep neural networks are neural networks with three or more layers, allowing them to learn complex patterns that cannot be captured by simple one- or two-layer networks. Like traditional neural networks, deep learning selects features based on their probability of yielding the best results. This approach is highly efficient for handling unstructured data, such as images, videos, and audio. In a deep neural network, each layer performs a set of operations to generate a representation of the input, which is then passed on to the next layer (10). Increased depth results in more abstract data representations while also enhancing the distinction between data classes (11). Currently, convolutional neural networks, recurrent neural networks, and residual neural networks are the deep learning architectures most commonly employed in surgical applications.

Applications of Artificial Intelligence

The previously stated methods have demonstrated significant potential in diverse domains of artificial intelligence. Natural language processing and computer vision have gained significant popularity in the field of medicine, especially in surgery.

Computer vision

Computer vision (CV) is a branch of artificial intelligence that focuses on the analysis and understanding of images and videos using machine learning techniques (5). It involves various processes such as image processing, pattern recognition, and signal processing (fig. 3; note that although CV does not encompass reinforcement learning, the two fields can overlap). It involves a system that integrates data from the individual pixels of an image, detects objects in the image, and potentially examines the empty spaces inside the image. By combining these components, it is possible to construct advanced applications, such as autonomous driving systems that use computers to identify items such as traffic signals, pedestrians, and open roadways. Convolutional neural networks (CNNs) have also demonstrated significant advantages for CV tasks (4).
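How a convolutional layer integrates information from neighbouring pixels can be shown with a small sketch. The 4x6 image and the vertical-edge kernel below are hypothetical and hand-written for illustration; in a CNN the kernel weights would be learned from data rather than specified by hand.

```python
def convolve2d(image, kernel):
    """Valid-mode 2D cross-correlation, the sliding weighted sum a CNN layer applies."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# Toy greyscale image: dark left half (0), bright right half (9).
image = [[0, 0, 0, 9, 9, 9] for _ in range(4)]

# Hand-written kernel that responds to left-to-right increases in brightness.
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

feature_map = convolve2d(image, kernel)
print(feature_map)  # [[0, 27, 27, 0], [0, 27, 27, 0]]
```

The feature map is zero over the uniform regions and large where the window straddles the dark-to-bright boundary, so the kernel acts as a simple edge detector built purely from pixel values.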

Figure 3 - Illustration of deep learning

With the increasing availability of visual surgical data, the field of CV is finding more and more applications in surgery. As laparoscopic, endoscopic, and robotic camera systems become more user-friendly, and as storage becomes cheaper and larger, an increasing number of surgeons are choosing to record their procedures for research, teaching, and education (4).

Natural language processing

Natural language processing (NLP) involves not only the identification of vocabulary, but also the development of machines that can understand human language. This entails comprehending synonyms, antonyms, definitions, and other interconnected facets of language. In the absence of NLP, computers are limited to interpreting machine languages or code (such as C, Java, and Visual Basic) and executing instructions that have been explicitly written and compiled into an output. NLP enables machines to have a basic understanding of human language as it is commonly used in everyday situations. The goal is to understand the structure and meaning of phrases, sentences, or paragraphs by focusing on syntax and semantics (12).

NLP is employed for the analysis of electronic medical records and the completion of dictation duties performed by healthcare personnel. The capability of NLP to analyse specific types of human language allows for the automatic evaluation and organisation of unstructured free text, such as radiology reports, progress reports, and operation notes. For example, sentiment analysis of patient notes can be used to anticipate a patient's health condition, while record analysis can be employed to forecast the probability of cancer in a patient (4).

Artificial Intelligence in the preoperative period

Detection

Detection identifies and locates regions of interest in space, and may also include classifying the regions or the entire image. Regions of interest are commonly depicted using bounding boxes or landmarks. Techniques utilising deep learning have shown promise in detecting various anomalies or medical conditions. In a Deep Convolutional Neural Network (DCNN) used for detection, convolutional layers are commonly utilised, whereas regression layers are employed to determine the parameters of the bounding box (13).

A deep convolutional autoencoder was trained on 4D positron-emission tomography images to extract both statistical and biological information, with the aim of detecting prostate cancer by analysing the data extracted from the images (14). For the diagnosis of pulmonary nodules, a 3D CNN with roto-translation group convolutions was proposed (15). This approach showed excellent sensitivity, accuracy, and convergence speed.

Dynamic contrast-enhanced MRI was used to formulate a search policy for finding breast lesions. The deep Q-network was extended, and Deep Reinforcement Learning (DRL) was then used to learn the search policy (16). Lee et al. utilised an attention map and an iterative process to imitate the workflow of radiologists, aiming to detect acute intracranial haemorrhage from CT scans and improve the interpretability of the network (17).

Classification

In classification, the input, comprising one or more medical images or volumes of organs or lesions, is classified to ascertain its diagnostic significance. Deep learning-based approaches are becoming increasingly popular alongside standard machine learning and image analysis techniques (18). These systems utilise convolutional layers to extract information from the input and fully connected layers to determine the diagnostic value.

For example, a classification pipeline based on Google's Inception and ResNet architectures was presented to classify bladder, breast, and lung tumours (19). Chilamkurthy et al. showed that deep learning can identify intracranial haemorrhage, midline shift, calvarial fracture, and mass effect from head CT scans (20). Recurrent neural networks (RNNs) predicted postoperative haemorrhage, death, and renal failure in patients receiving cardiosurgical care more accurately than standard clinical methods, and did so in real time (21). ResNet-50 and Darknet-19 have been used to determine whether tumours in ultrasound images are benign or malignant, showing similar sensitivity but improved specificity (22).

Segmentation

Segmentation assigns a category to each pixel or voxel in an image. Earlier approaches divided images into small windows, and CNNs predicted the target label at the centre of each window. Many windows were fed into the convolutional neural network to segment an image or volume. DeepMedic, for example, identified and segmented brain tumours from MRI scans well (23). Sliding window-based methods are wasteful, however, because network functions are computed repeatedly in areas where several windows overlap. They were superseded by Fully Convolutional Networks (FCNs). By replacing the fully connected layers in a classification network with convolutional and upsampling layers, FCNs improve segmentation efficiency (24). U-Net and other encoder-decoder networks have shown promising medical image segmentation outcomes (25,26). The encoder uses many convolutional and downsampling layers to extract visual features at different scales. The decoder's convolutional and upsampling layers recover the spatial resolution of the feature maps to segment pixels and voxels accurately. Zhou and Yang analysed numerous normalisation methods used to train U-Net models for medical image segmentation (27).
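The redundancy of the sliding-window approach can be made concrete with a small counting sketch. It assumes a hypothetical 8x8 image and a 3x3 window centred on every pixel where the window fits, and simply counts how many windows include each pixel. It does not model any particular network.

```python
def sliding_window_coverage(height, width, window):
    """Count how many windows include each pixel when a window is centred on every valid pixel."""
    half = window // 2
    coverage = [[0] * width for _ in range(height)]
    for cr in range(half, height - half):        # window centres that fit in the image
        for cc in range(half, width - half):
            for r in range(cr - half, cr + half + 1):
                for c in range(cc - half, cc + half + 1):
                    coverage[r][c] += 1
    return coverage

coverage = sliding_window_coverage(8, 8, 3)
total_reads = sum(map(sum, coverage))      # pixel values fed to the classifier overall
redundancy = total_reads / (8 * 8)         # average number of times each pixel is re-processed
print(coverage[3][3], coverage[0][0], total_reads, redundancy)  # 9 1 324 5.0625
```

In this toy setting an interior pixel is passed to the classifier nine times, and the count grows with the square of the window size. This repeated work over overlapping regions is what fully convolutional designs avoid by computing shared features once for the whole image.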

Registration

Registration refers to the process of spatially aligning two medical volumes, modalities, or images. It is particularly essential for both preoperative and intraoperative planning. Historically, medical image registration algorithms have typically relied on iterative optimisation of a parametric transformation in order to optimise a given similarity measure, such as mean square error or normalised cross-correlation. Deep regression models are supplanting these optimisation-based registration processes in order to provide quicker and more effective processing of medical input (13).
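A minimal sketch of optimisation-based registration is given below, under strong simplifying assumptions: the "images" are hypothetical 1D intensity profiles, the transformation is a pure integer translation, and the optimiser is an exhaustive search over candidate shifts rather than an iterative solver. It shows the two similarity measures named above, mean square error and normalised cross-correlation.

```python
import math

def mse(a, b):
    """Mean square error: 0 when the two signals are identical."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def ncc(a, b):
    """Normalised cross-correlation: 1.0 when the signals are perfectly linearly related."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    num = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    den = math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))
    return num / den if den else 0.0

def shift(signal, k):
    """Translate a 1D signal by k samples, padding with zeros."""
    n = len(signal)
    return [signal[i - k] if 0 <= i - k < n else 0.0 for i in range(n)]

# Hypothetical intensity profiles: the moving signal is the fixed one displaced by 3 samples.
fixed = [0, 0, 1, 4, 9, 4, 1, 0, 0, 0, 0, 0]
moving = shift(fixed, 3)

# Exhaustive search over candidate translations, keeping the one with the lowest MSE.
best_shift = min(range(-5, 6), key=lambda k: mse(fixed, shift(moving, k)))
aligned = shift(moving, best_shift)
print(best_shift, mse(fixed, aligned), ncc(fixed, aligned))
```

The search recovers a shift of -3, which undoes the displacement. A learned regression model aims to predict such transformation parameters directly, without evaluating the similarity measure for many candidates at run time.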

Decision aids

Decision aids include background data, diagnosis and treatment alternatives, pros and cons, and prediction of outcomes for specific patient populations. A systematic analysis of 31,043 patients who had to make screening or treatment decisions indicated that decision aids improved informed and active participation (28). A comprehensive study of 17 trials in surgical patients found that decision aids increased treatment awareness and the preference for less invasive procedures, while death, morbidity, quality of life, and anxiety were not significantly different (29). Decision aids are created for different patient groups with a single clinical presentation or option; hence, they do not account for individual patients' physiology and risk factors.

Prognostic scoring systems

Prognostic scoring systems utilise regression modelling to analyse data from patient groups in order to identify risk factors for individual patients. An elevation in the concentration of C-reactive protein (CRP) in the bloodstream following colorectal surgery is associated with the occurrence of anastomotic leak. Based on a meta-analysis, the optimal cutoff value for CRP on the third day following surgery is 172 mg/L (30). Nevertheless, this technique lacks complete accuracy as it fails to reflect the underlying pathology. CRP levels vary along a continuum and are directly linked to the presence of inflammation, with a reasonably stable half-life. Following a colectomy, clinicians use CRP levels as a means of identifying potential problems. However, the diagnosis of a problem does not rest on whether the CRP level is above or below the 172 mg/L criterion (31). A CRP level below the cutoff usually indicates the absence of problems, with a negative predictive value of 97%. The positive predictive value, however, is a mere 21%, meaning that a high CRP level does not definitively indicate a postoperative problem (32-34).
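The gap between a high negative predictive value and a low positive predictive value follows from how both are computed when the complication is uncommon. The sketch below uses hypothetical counts for 1,000 patients, invented purely for illustration and chosen so that the results land near the quoted 97% and 21%. They are not data from the cited studies.

```python
def predictive_values(tp, fp, fn, tn):
    """Summarise a 2x2 table of test result (above/below cutoff) against outcome."""
    return {
        "sensitivity": tp / (tp + fn),  # leaks correctly flagged by a high CRP
        "specificity": tn / (tn + fp),  # non-leaks correctly cleared by a low CRP
        "ppv": tp / (tp + fp),          # probability of a leak given a high CRP
        "npv": tn / (tn + fn),          # probability of no leak given a low CRP
    }

# Hypothetical cohort: 1,000 patients, 80 of whom develop an anastomotic leak.
metrics = predictive_values(tp=60, fp=226, fn=20, tn=694)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

With these assumed counts the test catches three quarters of leaks, yet most patients above the cutoff have no leak, simply because patients without a leak greatly outnumber those with one. This is why a value below the cutoff is reassuring while a value above it is not diagnostic.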

Prognostic scoring systems may involve a wide range of criteria, because most diseases are not attributable to a single physiological factor. Such systems are used to forecast stroke and serious gastrointestinal bleeding, as well as to assess the severity of an illness (32-34). Regression analysis, which is used in prognostic scoring systems, assumes linear relationships between input variables (35,36). However, in cases of non-linear relationships, the scoring system becomes as unpredictable as flipping a coin (37).

Prognostic scoring systems have been integrated as digital tools to calculate risk and assist with clinical application. An example of a widely recognised tool is the NSQIP Surgical Risk Calculator. The utilisation of calculators may increase the likelihood of patients adopting risk-reduction strategies such as prehabilitation. However, further development is necessary, as the input variables need to be entered manually and the predictive accuracy is suboptimal, especially for non-elective procedures.

Artificial Intelligence in the intraoperative period

Instantiating 3D shapes

3D reconstruction can be performed using MRI, CT, or ultrasound imaging during surgery. A three-dimensional surgical environment can be generated in real time while reducing the number of images required for reconstruction. In addition, improved techniques can boost the clarity of the reconstruction even further. A developing area of research involves generating a real-time representation of a 3D shape during surgery using only one or a few 2D images (13).

For instance, a 3D prostate shape was constructed using a radial basis function and multiple nonparallel 2D ultrasound images (38). Similarly, the 3D shapes of stent grafts in different states (fully deployed, fully compressed, and partially deployed) were generated through mathematical modelling, a robust perspective-n-point approach, graft gap interpolation, and graph neural networks (39-41). These shapes were created from a single 2D fluoroscopy projection using a technique called instantiation (42). To improve the efficiency of the framework used to create the shapes and to segment markers on stent grafts automatically, an equally-weighted focal U-Net was proposed. A 3D model of an Abdominal Aortic Aneurysm (AAA) was created using skeleton deformation and graph matching techniques with only one 2D fluoroscopy projection (43). Three mathematical techniques, Principal Component Analysis (PCA), Statistical Shape Model (SSM), and Partial Least Squares Regression (PLSR), were used to create a 3D shape of a liver from a single 2D projection (44). A framework for shape instantiation without registration was developed and expanded to include sparse PCA, SSM, and kernel PLSR (45). A one-stage deep learning technique has also been developed to create 3D shapes; it enables the creation of a three-dimensional point cloud using only one two-dimensional image (46).

Endoscopic navigation

Surgery is increasingly shifting towards endoscopic and intraluminal procedures that depend on prompt identification and intervention. The capacity of navigation systems to guide endoscopes towards particular locations has been evaluated. Depth estimation, visual odometry, and Simultaneous Localisation and Mapping (SLAM) techniques have been specifically designed to enable camera localisation and environment mapping using endoscopic images (13).

Accurate depth estimation from endoscopic images is essential for mapping the 3D structural environment and estimating the 6 degrees of freedom camera movements. This has been achieved through the use of self-supervised or supervised deep learning techniques (47-50).

Visual odometry is a process that determines the position and orientation of a moving camera by analysing a sequence of video frames. CNN-based algorithms have been employed for camera pose estimation, utilising temporal information. The evaluation of visual odometry-based localisation techniques has been limited to lung phantom and GI tract data (51,52).
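Once frame-to-frame motions have been estimated, visual odometry accumulates them into a camera trajectory. The sketch below illustrates only that accumulation step, under simplifying assumptions: motion is planar (x, y, heading) rather than the full 6 degrees of freedom, and the relative motions are hypothetical values supplied by hand rather than estimated from video by a CNN.

```python
import math

def compose(pose, motion):
    """Apply a relative motion (dx, dy, dtheta), given in the camera frame, to a global pose."""
    x, y, theta = pose
    dx, dy, dtheta = motion
    return (
        x + dx * math.cos(theta) - dy * math.sin(theta),
        y + dx * math.sin(theta) + dy * math.cos(theta),
        theta + dtheta,
    )

# Hypothetical per-frame motions: advance 1 unit, then turn 90 degrees, four times.
motions = [(1.0, 0.0, math.pi / 2)] * 4

trajectory = [(0.0, 0.0, 0.0)]          # start at the origin, facing along +x
for m in motions:
    trajectory.append(compose(trajectory[-1], m))

for x, y, theta in trajectory:
    print(f"x={x:.2f} y={y:.2f} heading={math.degrees(theta):.0f} deg")
```

The four motions trace a square and return the camera to its starting position. Because each pose is built on the previous one, any error in an estimated motion carries forward into every later pose, which is one reason such methods need careful evaluation.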

Because tissue is dynamic, navigation requires real-time 3D reconstruction and localisation of the surrounding tissue. Simultaneous Localisation and Mapping (SLAM) is a well-studied robotics approach. Using SLAM, a robot can locate the camera on its map while building a three-dimensional map of its surroundings. Traditional SLAM approaches assume a rigid environment, which does not hold in a surgical setting where soft tissues and organs may deform. This assumption limits the use of the technology in surgery. Mountney et al. examined how breathing-induced soft tissue movement affects SLAM estimation using a stereo endoscope and EKF-SLAM (53). Grasa et al. used monocular EKF-SLAM to assess hernia defects during hernia repair surgery (54). Turan et al. estimated depth images from RGB data using shape from shading, and later developed an RGB-D SLAM employing the RGB and depth images (55). Song et al. developed a CPU-based ORB-SLAM and a GPU-based dense deformable SLAM to improve stereo endoscope localisation and mapping (56).

Tissue feature tracking

Minimally invasive surgery uses learning methods for soft tissue tracking. Mountney and Yang created an online learning system that uses decision tree classification to select relevant features (57). The feature tracker is updated over time using this method. Ye et al. identified and tracked GI soft tissue surfaces using an online random forest and a structured SVM (58). Wang et al. used a statistical appearance model in their region-based 3D tracking system to differentiate organs from the background (59). The validation findings showed that learning algorithms can improve the robustness of tissue tracking to deformations and illumination changes.

Augmented reality

Augmented reality (AR) overlays a partially transparent preoperative image onto the area of interest to improve the surgeon's view (60). Wang et al. used a projector to display the AR overlay during oral and maxillofacial surgery (61). 3D contour matching was used to align the virtual image with the teeth. Instead of a projector, Pratt et al. used HoloLens to display a 3D vascular model on patients' lower limbs (62). For AR navigation, Zhang et al. devised a framework that automatically registers 3D deformable tissue using Iterative Closest Point and Random Sample Consensus (63). This was crucial because projecting the overlay onto markerless deformable organs is difficult.

Robotics

Artificial intelligence has enhanced the efficiency of surgical robots in Minimally Invasive Surgery (MIS). The objective is to enhance their perception, decision-making, and execution of targeted actions (63,64). The primary areas of emphasis for AI techniques in Robotic and Autonomous Systems (RAS) include perception, localisation and mapping, system modelling and control, and human-robot interaction.

Artificial Intelligence in the postoperative period

Automated electronic health records data

The Health Information Technology for Economic and Clinical Health Act of 2009 promoted the adoption of Electronic Health Record (EHR) systems (65). Within less than 6 years, over 80% of US hospitals had implemented EHRs (66). These systems generate a substantial volume of data, which is expected to continue increasing. In 2013, around 153 billion gigabytes (GB) of data were generated, and this volume is projected to grow by 48% annually (67). This large amount of data is ideal for artificial intelligence models that are designed to handle big datasets.

Because EHRs are updated automatically when fresh patient data become available, artificial intelligence models can generate real-time forecasts and suggestions. Recent publications demonstrate the viability of this approach. The MySurgeryRisk platform uses EHR data on 285 variables to forecast 8 potential complications after surgery, achieving an area under the curve (AUC) ranging from 0.82 to 0.94 for these complications. Additionally, it predicts the likelihood of mortality after 24, 36, and 1 year with an AUC ranging from 0.77 to 0.83. The programme receives data from electronic health records automatically, eliminating the requirement for human data entry and search. This removes a significant obstacle to clinical application. In a prospective study, the system demonstrated superior accuracy in identifying postoperative complications compared to doctors (68).
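For readers less familiar with the AUC, it can be read as the probability that a randomly chosen patient who developed the complication was assigned a higher predicted risk than a randomly chosen patient who did not. The sketch below computes it directly from that definition. The risk scores and outcomes are hypothetical and are not MySurgeryRisk data.

```python
def auc(scores, labels):
    """Share of (positive, negative) pairs ranked correctly; ties count as half."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos for n in neg
    )
    return wins / (len(pos) * len(neg))

# Hypothetical predicted risks and observed outcomes (1 = complication occurred).
scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4, 0.3, 0.2]
labels = [1,   1,   0,   1,   0,    1,   0,   0]

model_auc = auc(scores, labels)
print(model_auc)  # 0.8125
```

An AUC of 0.5 corresponds to ranking patients no better than chance and 1.0 to a perfect ranking, so values such as those reported above indicate that the model ranks most affected patients above most unaffected ones.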

Conclusion

AI is the outcome of merging numerical operations with computer aid to generate intelligence. AI can be considered a broad umbrella term that encompasses subfields such as machine learning, which in turn includes techniques such as neural networks and deep learning. Natural language processing and computer vision are widely utilised in the field of medicine, with a particular emphasis on their application in surgical procedures. AI can assist in the preoperative, intraoperative, and postoperative stages of surgery. In the preoperative stage, AI can aid diagnosis and facilitate surgical decision-making. During surgery, AI may assist in several ways, including creating three-dimensional (3D) models of shapes, guiding endoscopic navigation, tracking tissue features, implementing augmented reality (AR), and controlling robotic systems. In the postoperative setting, AI can be utilised to automate the processing of electronic health records. Although AI developments have made it possible to provide surgical assistance, some challenges must still be solved from a practical perspective. It is necessary to address data availability, data annotation, data standardisation, technical infrastructure, interpretability, safety, monitoring, ethics, and legal considerations. Surgeons must therefore be prepared not only to embrace this transformation but also to participate actively in its development and implementation.

Conflict of interest

The authors have no conflict of interest in this article.

Funding

This review article received no funding.

References

1. Kolanska K, Chabbert-Buffet N, Daraï E, Antoine J. Artificial intelligence in medicine: a matter of joy or concern? J Gynecol Obstet Hum Reprod. 2020;50:101962.

2. Jean A. A brief history of artificial intelligence. Med Sci (Paris). 2020;36(11):1059-1067. French.

3. Nilsson N. Artificial intelligence, employment, and income. Human Systems Management. 1985;5:123-35.

4. Hashimoto DA, Ward TM, Meireles OR. The Role of Artificial Intelligence in Surgery. Adv Surg. 2020;54:89-101.

5. Hashimoto DA, Rosman G, Rus D, Meireles OR. Artificial Intelligence in Surgery: Promises and Perils. Ann Surg. 2018;268(1):70-76.

6. Avanzolini G, Barbini P, Gnudi G. Unsupervised learning and discriminant analysis applied to identification of high risk postoperative cardiac patients. Int J Biomed Comput. 1990;25(2-3):207-21.

7. DiPietro R, Hager GD. Unsupervised Learning for Surgical Motion by Learning to Predict the Future. In 2018. p. 281-8.

8. Skinner B. The behavior of organisms: An experimental analysis. BF Skinner Foundation; 1990.

9. Hebb DO. The Organization of Behavior. Psychology Press; 2005.

10. Natarajan P, Frenzel JC, Smaltz DH. Demystifying Big Data and Machine Learning for Healthcare. Boca Raton: Taylor & Francis, 2017. CRC Press; 2017.

11. Esteva A, Robicquet A, Ramsundar B, Kuleshov V, DePristo M, Chou K, et al. A guide to deep learning in healthcare. Nat Med. 2019;25(1):24-9.

12. Nadkarni PM, Ohno-Machado L, Chapman WW. Natural language processing: an introduction. J Am Med Inform Assoc. 2011;18(5):544-51.

13. Zhou XY, Guo Y, Shen M, Yang GZ. Application of artificial intelligence in surgery. Front Med. 2020;14(4):417-430.

14. Rubinstein E, Salhov M, Nidam-Leshem M, White V, Golan S, Baniel J, et al. Unsupervised tumor detection in Dynamic PET/CT imaging of the prostate. Med Image Anal. 2019;55:27-40.

15. Winkels M, Cohen TS. Pulmonary nodule detection in CT scans with equivariant CNNs. Med Image Anal. 2019;55:15-26.

16. Maicas G, Carneiro G, Bradley AP, Nascimento JC, Reid I. Deep Reinforcement Learning for Active Breast Lesion Detection from DCE-MRI. In 2017. p. 665-73.

17. Lee H, Yune S, Mansouri M, Kim M, Tajmir SH, Guerrier CE, et al. An explainable deep-learning algorithm for the detection of acute intracranial haemorrhage from small datasets. Nat Biomed Eng. 2018;3(3):173-82.

18. Litjens G, Kooi T, Bejnordi BE, Setio AAA, Ciompi F, Ghafoorian M, et al. A survey on deep learning in medical image analysis. Med Image Anal. 2017;42:60-88.

19. Khosravi P, Kazemi E, Imielinski M, Elemento O, Hajirasouliha I. Deep Convolutional Neural Networks Enable Discrimination of Heterogeneous Digital Pathology Images. EBioMedicine. 2018;27:317-328.

20. Chilamkurthy S, Ghosh R, Tanamala S, Biviji M, Campeau NG, Venugopal VK, et al. Deep learning algorithms for detection of critical findings in head CT scans: a retrospective study. Lancet. 2018;392(10162):2388-96.

21. Meyer A, Zverinski D, Pfahringer B, Kempfert J, Kuehne T, Sündermann SH, et al. Machine learning for real-time prediction of complications in critical care: a retrospective study. Lancet Respir Med. 2018;6(12):905-14.

22. Li X, Zhang S, Zhang Q, Wei X, Pan Y, Zhao J, et al. Diagnosis of thyroid cancer using deep convolutional neural network models applied to sonographic images: a retrospective, multicohort, diagnostic study. Lancet Oncol. 2019;20(2):193-201.

23. Kamnitsas K, Ledig C, Newcombe VFJ, Simpson JP, Kane AD, Menon DK, et al. Efficient multi-scale 3D CNN with fully connected CRF for accurate brain lesion segmentation. Med Image Anal. 2017;36:61-78.

24. Long J, Shelhamer E, Darrell T. Fully convolutional networks for semantic segmentation. In: IEEE Conference on Computer Vision and Pattern Recognition. Boston; 2015. p. 3431-40.

25. Ronneberger O, Fischer P, Brox T. U-Net: convolutional networks for biomedical image segmentation. In: Proceedings of International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI). New York: Springer; 2015. p. 234-41.

26. Cicek O, Abdulkadir A, Lienkamp S, Brox T, Ronneberger O. 3D U-Net: learning dense volumetric segmentation from sparse annotation. In: Proceedings of International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI). New York: Springer; 2016. p. 424-32.

27. Zhou X, Yang G. Normalization in training U-Net for 2D biomedical semantic segmentation. IEEE Robot Autom Lett. 2019;4(2):1792-9.

28. Stacey D, Légaré F, Lewis K, Barry MJ, Bennett CL, Eden KB, et al. Decision aids for people facing health treatment or screening decisions. Cochrane Database Syst Rev. 2017;4(4):CD001431.

29. Knops AM, Legemate DA, Goossens A, Bossuyt PMM, Ubbink DT. Decision Aids for Patients Facing a Surgical Treatment Decision. Ann Surg. 2013;257(5):860-6.

30. Singh PP, Zeng ISL, Srinivasa S, Lemanu DP, Connolly AB, Hill AG. Systematic review and meta-analysis of use of serum C-reactive protein levels to predict anastomotic leak after colorectal surgery. Br J Surg. 2014;101(4):339-46.

31. Pepys MB, Hirschfield GM, Tennent GA, Ruth Gallimore J, Kahan MC, Bellotti V, et al. Targeting C-reactive protein for the treatment of cardiovascular disease. Nature. 2006;440(7088):1217-21.

32. Gage BF, van Walraven C, Pearce L, Hart RG, Koudstaal PJ, Boode BSP, et al. Selecting Patients With Atrial Fibrillation for Anticoagulation. Circulation. 2004;110(16):2287-92.

33. Strate LL, Saltzman JR, Ookubo R, Mutinga ML, Syngal S. Validation of a Clinical Prediction Rule for Severe Acute Lower Intestinal Bleeding. Am J Gastroenterol. 2005;100(8):1821-7.

34. Vincent JL, Moreno R, Takala J, Willatts S, De Mendonça A, Bruining H, et al. The SOFA (Sepsis-related Organ Failure Assessment) score to describe organ dysfunction/failure. Intensive Care Med. 1996;22(7):707-10.

35. Dybowski R, Gant V, Weller P, Chang R. Prediction of outcome in critically ill patients using artificial neural network synthesised by genetic algorithm. The Lancet. 1996;347(9009):1146-50.

36. Kim S, Kim W, Park RW. A Comparison of Intensive Care Unit Mortality Prediction Models through the Use of Data Mining Techniques. Healthc Inform Res. 2011;17(4):232.

37. Bagnall NM, Pring ET, Malietzis G, Athanasiou T, Faiz OD, Kennedy RH, et al. Perioperative risk prediction in the era of enhanced recovery: a comparison of POSSUM, ACPGBI, and E-PASS scoring systems in major surgical procedures of the colorectal surgeon. Int J Colorectal Dis. 2018;33(11):1627-34.


e-ISSN: 2601 - 1700 (online)

ISSN-L: 2559 - 723X

Journal Abbreviation: Surg. Gastroenterol. Oncol.

Surgery, Gastroenterology and Oncology (SGO) is indexed in:

- SCOPUS

- EBSCO

- DOI/Crossref

- Google Scholar

- SCImago

- Harvard Library

- Open Academic Journals Index (OAJI)

Surgery, Gastroenterology and Oncology (SGO) is an open-access, peer-reviewed online journal published by Celsius Publishing House. The journal allows readers to read, download, copy, distribute, print, search, or link to the full text of its articles.

Time to first editorial decision: 25 days

Rejection rate: 61%

CiteScore: 0.2


Surgery, Gastroenterology and Oncology applies the Creative Commons Attribution Non Commercial (CC BY-NC 4.0) license, which permits readers to copy and redistribute the material in any medium or format, remix, adapt, build upon the published works non-commercially, and license the derivative works on different terms, provided the original material is properly cited and the use is non-commercial. Please see: https://creativecommons.org/licenses/by-nc/4.0/

Publisher’s Note:

The opinions, statements, and data contained in article are solely those of the authors and not of Surgery, Gastroenterology and Oncology journal or the editors. Publisher and the editors disclaim responsibility for any damage resulting from any ideas, instructions, methods, or products referred to in the content.
